Dialogue in Resonance

An Interactive Music Piece for the Piano and Real-Time Automatic Transcription System (ICMC 2025)

Abstract

Dialogue in Resonance is an interactive music piece for a human pianist and a computer-controlled (AI) piano that integrates real-time automatic music transcription into a score-driven framework. Unlike previous approaches, which focus primarily on improvisation-based interaction, our work combines composed structure with dynamic interaction. With real-time automatic transcription as its core mechanism, the computer interprets and responds to the pianist's playing as it happens, creating a musical dialogue that balances compositional intent with the unpredictability of live interaction.

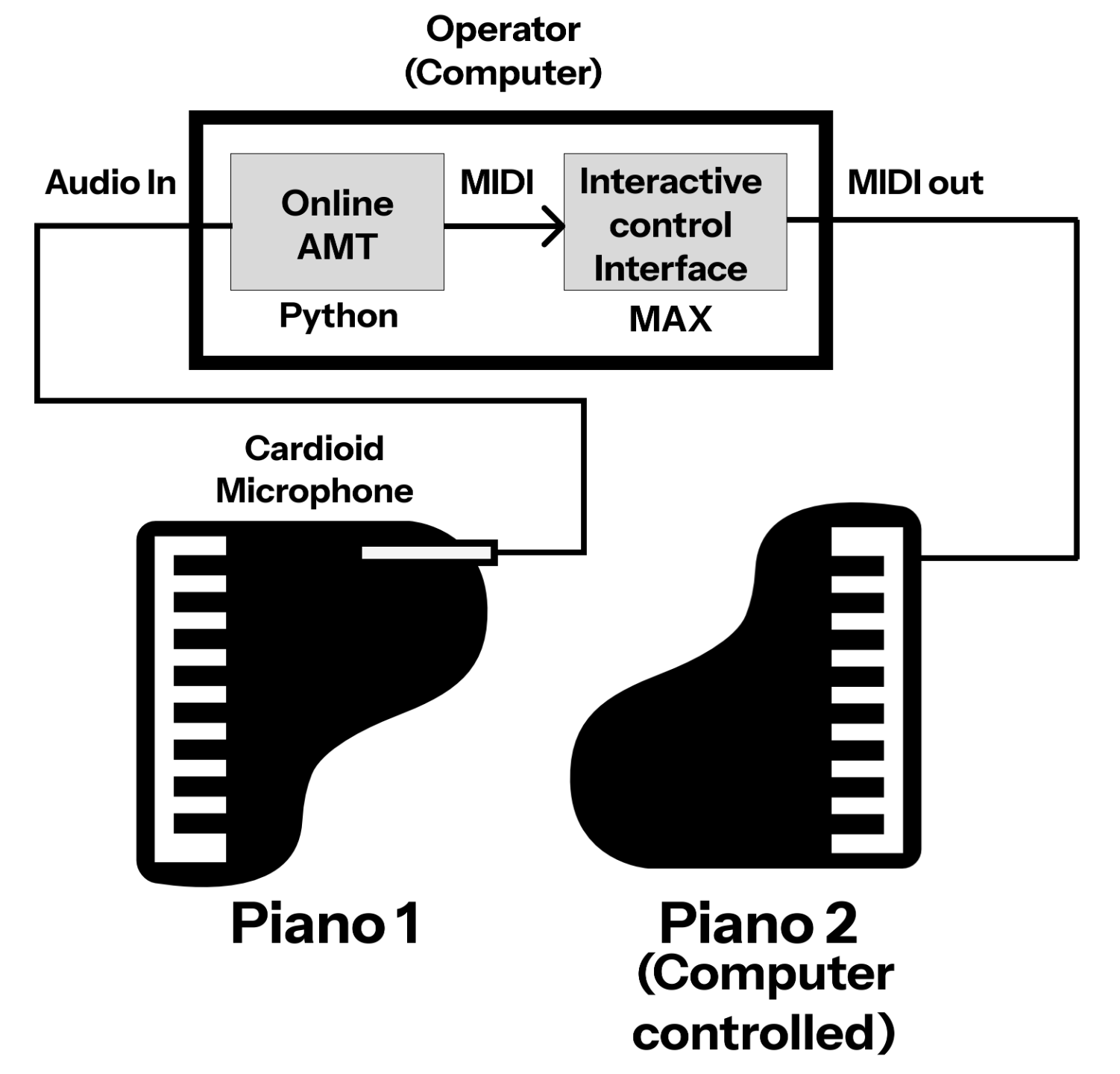

System Overview

The system architecture consists of two main components: a Python-based online AMT (Automatic Music Transcription) model that processes the audio in real time, and a Max/MSP interface that handles MIDI processing and real-time adjustments. Under ideal conditions, the AMT model achieves an onset F1 score of over 95%, with a latency of approximately 350 ms.
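For readers unfamiliar with the onset F1 metric, the following is a minimal sketch of how such a score is commonly computed. It is not the evaluation code used for this piece. The function name `onset_f1`, the `(onset_seconds, midi_pitch)` note format, and the 50 ms tolerance are assumptions for illustration; the page does not state the tolerance or matching procedure used. The sketch matches notes greedily, whereas standard evaluation toolkits typically use an optimal bipartite matching.

```python
def onset_f1(reference, estimated, tolerance=0.05):
    """Note-onset F1 between two lists of (onset_seconds, midi_pitch).

    An estimated note counts as correct if an unused reference note has
    the same pitch and an onset within `tolerance` seconds.
    The 0.05 s default is an assumed, commonly used value.
    """
    ref = sorted(reference)
    est = sorted(estimated)
    used = [False] * len(ref)  # each reference note may be matched once
    matched = 0

    # Greedy matching in onset order (a simplification of optimal matching).
    for onset, pitch in est:
        for i, (ref_onset, ref_pitch) in enumerate(ref):
            if (not used[i]
                    and ref_pitch == pitch
                    and abs(ref_onset - onset) <= tolerance):
                used[i] = True
                matched += 1
                break

    precision = matched / len(est) if est else 0.0
    recall = matched / len(ref) if ref else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical example data: three played notes, four transcribed notes.
played = [(0.00, 60), (0.50, 64), (1.00, 67)]
transcribed = [(0.02, 60), (0.52, 64), (1.20, 67), (1.50, 72)]
score = onset_f1(played, transcribed)
```

In this made-up example, two of the four transcribed notes match two of the three played notes, so precision is 2/4 and recall is 2/3. A score above 95% means that nearly every played note is detected at the right pitch and time, with very few spurious notes.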

Full Score

The full score of Dialogue in Resonance is available for download.

However, our Max/MSP patch is not publicly available at this time, as it still needs further organization.

If you are interested in performing this piece, please contact hayeonbang@kaist.ac.kr.